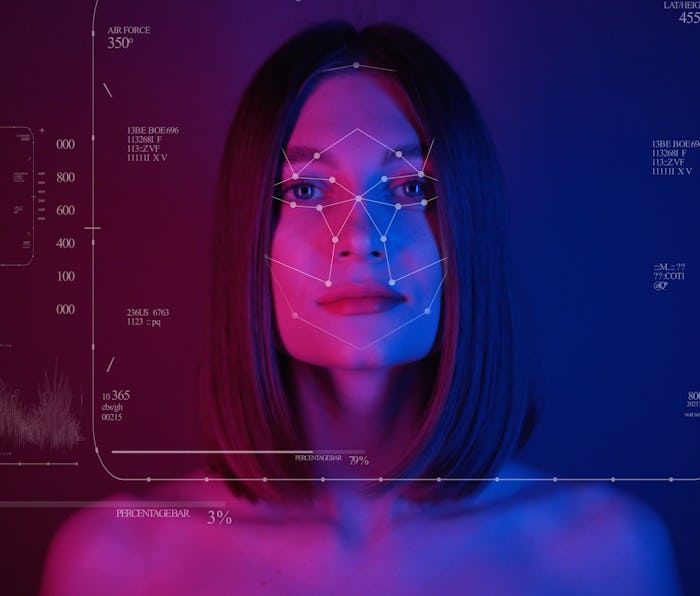

Facial recognition software has a gender bias problem

The problem of machine learning systems perpetuating bias rather than reducing it remains very much alive.

Google's cloud image recognition service is receiving fresh criticism for replicating real-world bias against women. Researchers found that the service applied three times as many physical descriptions to images of women as it did to images of men. For images of men, terms like "official" and "business person" came up most frequently. For women, as is often the case in a society that tends to judge them by their appearance, the system more often turned to terms like "chin" and "smile."

In one comparison, researchers set the official photo of Steve Daines against that of Lucille Roybal-Allard. Daines, a Republican senator from Montana, and Roybal-Allard, a Democratic representative for California's 40th congressional district, were categorized in entirely contrasting terms, despite both wearing formal attire, standing in front of a flag, and holding comparable jobs as members of Congress.

Daines was categorized with terms like "public speaking," "speech," "business," "suit," "speaker," "businessperson," "official," and "spokesperson." Meanwhile, Roybal-Allard was categorized with keywords like "television presenter," "smile," "spokesperson," "black hair," "chin," "hairstyle," and "person." The artificial intelligence places a curious amount of emphasis on the physical attributes of the female politician.
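
To make the contrast concrete, the quoted labels can be tallied in a few lines of Python. This is only an illustrative sketch: the label lists come from the comparison above, but the `APPEARANCE` set and the `appearance_share` helper are assumptions made for this example and are not the researchers' coding scheme or method.

```python
# Labels quoted in the article for each official photo.
labels = {
    "Daines": ["public speaking", "speech", "business", "suit", "speaker",
               "businessperson", "official", "spokesperson"],
    "Roybal-Allard": ["television presenter", "smile", "spokesperson",
                      "black hair", "chin", "hairstyle", "person"],
}

# Assumption: a hand-picked set of labels treated as physical descriptions.
# This grouping is illustrative, not the study's own categorization.
APPEARANCE = {"smile", "black hair", "chin", "hairstyle"}


def appearance_share(tags):
    """Return the fraction of tags that fall in the APPEARANCE set."""
    if not tags:
        return 0.0
    return sum(tag in APPEARANCE for tag in tags) / len(tags)


shares = {name: appearance_share(tags) for name, tags in labels.items()}
for name, share in shares.items():
    print(f"{name}: {share:.0%} appearance-related labels")
```

Under that assumed grouping, none of the eight labels for Daines describe his appearance, while four of the seven for Roybal-Allard do. A different choice of which labels count as physical, for example including "suit," would shift the numbers.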

What researchers say — Postdoctoral researcher Carsten Schwemmer, one of the authors of the study, notes in the paper that the bias is two-fold. "Image search algorithms not only exhibit bias in identification — algorithms “see” men and women and different races — but bias in content, assigning high-powered female politicians labels related to lower social status," Schwemmer wrote.

The researchers analyzed image recognition systems from Google, Amazon, and Microsoft, using a dataset of images of male and female lawmakers. The systems tagged women in politics with labels such as "girl" and "beauty" while largely keeping to professional descriptors for their male counterparts.

No such thing as a neutral algorithm — No algorithms are inherently neutral or socially indifferent to tropes and stereotypes. Biased inputs create biased outputs. Until we insist neutrality is part of the design and put in checks and measures to ensure it's realized, the biases of those people creating algorithms will inevitably filter into them.

It's a straightforward and rather obvious conclusion, but the makers of these systems have continued to downplay such worries. Beyond gender bias, facial recognition systems are receiving growing scrutiny for their use by law enforcement to target dissidents and activists. That use continues despite a mountain of evidence that the systems are unreliable and tend to produce more false positives, or wrongful identifications, for people of color.

Earlier in 2020, Google said it would end gender detection in its facial recognition system to mitigate bias and false labels. Hopefully, its rivals will follow its example. But judging by what 2020 has shown us, from the charged protests against police brutality to mass surveillance measures in the name of targeting COVID-19, this technology may well get worse before it gets better.