Don't Panic

The military assures us a lot of thought goes into building killer robots

The Department of Defense's new "responsible artificial intelligence" guidelines are a first for the government. But we’re not sleeping any better.

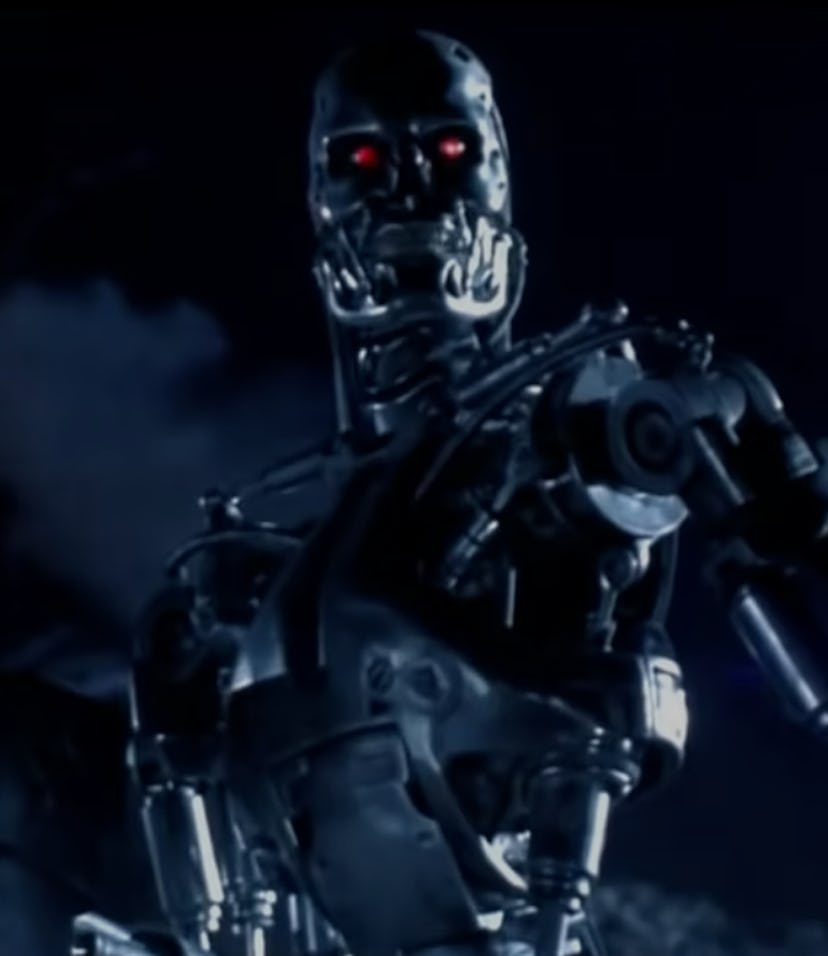

Listen up, everyone. The Department of Defense is tired of hearing your repeated gripes about its hiring third-party contractors like Google, Amazon, and Microsoft to help design better death-dealing artificial intelligence systems for the forever wars. To shut everyone up once and for all, the DoD’s Defense Innovation Unit (DIU) has released its Responsible AI Guidelines for all outside partners hired to make its semi-sentient mechanical murder machines as ethical and thoughtful as possible.

According to Technology Review, this includes “identifying who might use the technology, who might be harmed by it, what those harms might be, and how they might be avoided—both before the system is built and once it is up and running.” A co-author of the guidelines also informed Technology Review that “there are no other guidelines that exist, either within the DoD or, frankly, the United States government, that go into this level of detail,” something they apparently thought would comfort us.

In harm’s way — Developed over the span of nearly a year and a half, the guidelines give potential future contract bidders a handy how-to for the planning, construction, and implementation of their AI-related projects. In Phase One, for example, they provide a flow chart of reflective questions, including things like “Have you conducted harms modeling to assess likelihood and magnitude of harm?”

Of course, in many instances, these companies are designing AI to maximize the amount of harm inflicted on its targets. There is absolutely no mention of limiting or discouraging lethal autonomous systems (for instance, advanced, armed drone fleets), meaning there is obviously no impediment to the DoD actively pursuing the smartest, deadliest AI programs possible. It just wants you to know that, going forward, it’s going to make sure its contract awardees sit down and have a really good, hard think about the whole terrifying endeavor.

Already skewing the truth — It doesn’t inspire a whole lot of confidence in the Responsible AI Guidelines framework to also hear that the DoD is misrepresenting the facts right out of the gate. The military claims it consulted a wide array of AI experts and critics for this project, but the contributions of at least one person named in the guidelines have reportedly already been exaggerated.

The DoD names Meredith Whittaker, a former Google employee who previously led protests against the company accepting military contracts, as a consultant. But a spokesperson for New York University’s AI Now Institute (where Whittaker is now faculty director) told Technology Review that Whittaker met with program officials only once, and that was to raise concerns about the program’s direction.

“Claiming that she was [a consultant] could be read as a form of ethics-washing, in which the presence of dissenting voices during a small part of a long process is used to claim that a given outcome has broad buy-in from relevant stakeholders,” the spokesperson explained.

You’re off to a great start, DoD.