Microsoft's made new anti-deepfake tech to fight election misinformation

But the company also warns that AI will soon outpace it again.

Two months out, the 2020 presidential election is increasingly stress-inducing. The president is trying to slow down the postal service and convince voters that mail-in ballots will lead to election fraud. Both Facebook and Twitter have caught multiple disinformation networks attempting to spread lies and push political agendas. The last thing we need right now is more potential for the election process to go awry.

Microsoft agrees, and the tech giant is taking matters into its own hands. This week the company announced two new technologies to combat disinformation, along with an effort to educate the public about what is quickly becoming a pressing issue.

This problem isn’t limited to just the current election, either. In its announcement, Microsoft cites a Princeton study that found 96 foreign influence campaigns targeting 30 different countries between 2013 and 2019. Only 26 percent of those campaigns targeted the U.S.; the rest targeted countries ranging from Armenia to the Netherlands to Brazil and South Africa.

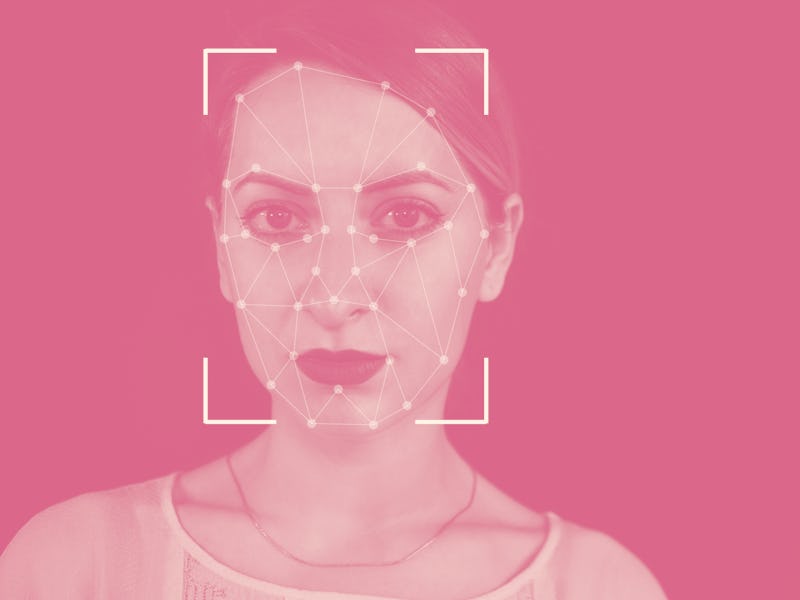

Say goodbye to the fakers — Microsoft sees deepfakes (AI-manipulated videos that look like the real thing) as one of the most pressing forms of misinformation going forward. Other experts have voiced the same escalating concern: cases involving extortion, terrorism, and even deepfake porn show just how quickly deepfakes can turn dangerous.

That’s why Microsoft created the Microsoft Video Authenticator, a media analysis program that estimates the likelihood that a photo or video has been manipulated. The Video Authenticator uses AI to give users a percentage, called a “confidence score,” indicating how likely it is that the media has been artificially manipulated. For videos, the software can even provide a real-time, frame-by-frame analysis. The technology works by detecting subtle fading or greyscale elements that may not be visible to the human eye.
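Microsoft hasn't published how Video Authenticator computes or reports its scores, so the sketch below is only an illustration of the general idea of a frame-by-frame confidence score. It assumes some detector has already produced a manipulation probability between 0 and 1 for each frame; the function names, the `VideoReport` structure, and the 0.5 threshold are all hypothetical.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class VideoReport:
    frame_scores: list[float]   # hypothetical per-frame manipulation probabilities
    mean_score: float           # average over all frames
    peak_score: float           # highest single-frame score
    flagged_frames: list[int]   # indices of frames at or above the threshold


def summarize_scores(frame_scores: list[float], threshold: float = 0.5) -> VideoReport:
    """Summarize per-frame scores into a video-level report.

    The scores are assumed to come from some detector; this is NOT
    Video Authenticator's actual model or API. The threshold is an
    illustrative cutoff, not a published value.
    """
    if not frame_scores:
        raise ValueError("need at least one frame score")
    for s in frame_scores:
        if not 0.0 <= s <= 1.0:
            raise ValueError(f"score out of range: {s}")
    flagged = [i for i, s in enumerate(frame_scores) if s >= threshold]
    return VideoReport(
        frame_scores=list(frame_scores),
        mean_score=mean(frame_scores),
        peak_score=max(frame_scores),
        flagged_frames=flagged,
    )


def as_percent(score: float) -> str:
    """Render a 0-1 score as a whole-number percentage string."""
    return f"{score * 100:.0f}%"


if __name__ == "__main__":
    scores = [0.05, 0.10, 0.82, 0.91, 0.12]  # made-up example values
    report = summarize_scores(scores)
    print("peak confidence:", as_percent(report.peak_score))
    print("flagged frames:", report.flagged_frames)
```

The sketch reports the peak score and the flagged frames alongside the mean because a short doctored segment inside a long, otherwise genuine clip could be diluted by averaging alone.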

Microsoft is also in the process of releasing technology that adds digital signatures to a video’s encoding to prove its authenticity, along with software that reads those signatures and tells users whether the video checks out.
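The article doesn't describe Microsoft's signature format, so the following is only a minimal sketch of the general hash-and-sign idea: the publisher computes a hash of the media and signs it, and a reader recomputes the hash and checks the signature. To stay dependency-free it uses an HMAC (a shared-secret tag) as a stand-in for a real public-key signature with certificates; the key and function names are hypothetical.

```python
import hashlib
import hmac

# Hypothetical publisher key. A real provenance system would use a
# public/private key pair plus certificates; an HMAC shared secret is
# used here only as a simple, stdlib-only stand-in.
SECRET_KEY = b"hypothetical-publisher-key"


def content_hash(media_bytes: bytes) -> bytes:
    """SHA-256 digest of the raw media bytes."""
    return hashlib.sha256(media_bytes).digest()


def sign(media_bytes: bytes, key: bytes = SECRET_KEY) -> str:
    """Publisher side: produce a tag to ship alongside the media."""
    return hmac.new(key, content_hash(media_bytes), hashlib.sha256).hexdigest()


def verify(media_bytes: bytes, tag: str, key: bytes = SECRET_KEY) -> bool:
    """Reader side: recompute the tag and compare in constant time."""
    expected = sign(media_bytes, key)
    return hmac.compare_digest(expected, tag)


if __name__ == "__main__":
    original = b"stand-in for encoded video bytes"
    tag = sign(original)
    print(verify(original, tag))          # untouched media
    print(verify(original + b"!", tag))   # altered media
```

Because any change to the bytes changes the hash, tampered media fails verification. The flip side, in this byte-level sketch, is that a harmless re-encode would fail too.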

Education is the best strategy — As Microsoft notes in its announcement, the problem of deepfakes isn’t going away any time soon. We can do our best to detect them for now, but the AI that makes them possible is, by its very nature, constantly improving. That means today’s detection methods will soon be outdated.

For this reason, it’s critical not just to build software that identifies deepfakes but also to teach the public how to spot them. The skeptical among us might run a quick Google search to check a claim, or a reverse image search if a still looks suspicious, but when it comes to video, we’re far more willing to believe what our eyes tell us. That needs to change, sadly.

We need to get better at spotting manipulated media and misinformation. To that end, Microsoft is partnering with the University of Washington, USA Today, and AI firm Sensity to create interactive quizzes that help the public build their media literacy.

Notably absent from Microsoft’s announcement is a discussion of how accurate its new software is. Up until now, the best deepfake detector we’ve seen has been accurate just 65 percent of the time. We hope the Video Authenticator has a better track record than that.